On ChatGPT (and other LLMs)

At this point, it is hard to find anyone who hasn’t heard of the latest addition to generative AI models: ChatGPT.

It is miraculous that we have seen such incredible advancements in just a few short years. In my opinion, ChatGPT is already exceptional at what it does: it is an assistant that can be right or wrong, but it understands you and works alongside you as you figure things out.

(If you are interested in learning more about LLMs, GPT, and the underlying technologies, see the links in the References section of this post and/or visit the “awesome links” section of my site.)

While there has been much discussion of its capabilities, strengths, and shortcomings over the past few months, there are two points that I believe deserve more attention than most sources have given them.

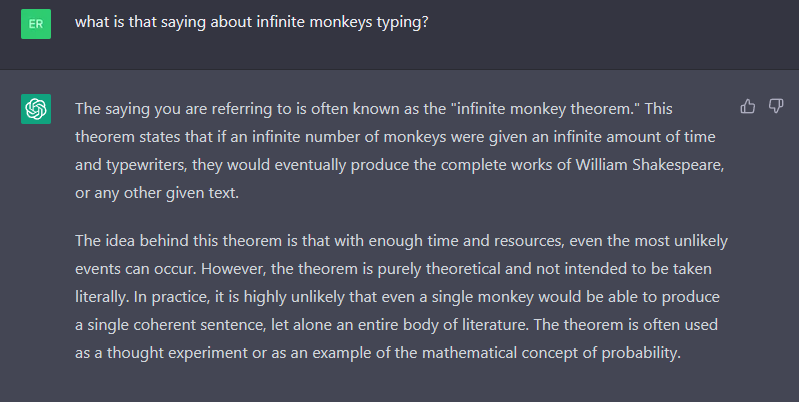

Infinite monkey theorem

You have probably heard of the “infinite monkey theorem”. If you haven’t, ChatGPT provides a good explanation of the theorem in the response above.
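The theorem itself is easy to state precisely. As a short sketch: suppose a monkey presses one of k keys uniformly at random, and we split its output into consecutive blocks of n characters, where n is the length of the target text. Each block matches the target independently with probability p, so the chance of never producing the text goes to zero. The six-letter word “banana” on a 26-key typewriter is just an illustrative choice:

```latex
\begin{aligned}
p &= k^{-n} \\
\Pr[\text{no match in the first } m \text{ blocks}] &= \left(1 - k^{-n}\right)^{m} \xrightarrow{\,m \to \infty\,} 0 \\
k = 26,\ n = 6\ (\text{``banana''}):\quad p &= 26^{-6} = 1 / 308{,}915{,}776
\end{aligned}
```

So success is certain in the limit, but the expected wait is 1/p = 26^6 blocks for a single six-letter word, which is why purely random typing is hopeless in practice.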

As the name suggests, and as the prompt and response above point out, this is (and always has been) only a theoretical idea. I partially disagree with that consensus, though! As ChatGPT and other major LLMs have shown, and will show even better as they improve and grow, there really are monkeys behind ChatGPT: not infinitely many, but, more accurately, something like 175 billion of them (GPT-3’s parameter count)!

We have also seen exceptional performance from other tools built on transformer technology, first for images and more recently for other modalities.

What piques my interest even more is that the same approach could apply to any other sequence of things and generate more and more of that thing. I use “thing” here in its most generic sense. In theory, we should be able to train a model on sequences of any item that can be formally represented, and ask the machine to generate the next item in the sequence.
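To make “predict the next thing” concrete, here is a toy Python sketch over arbitrary items. It is a first-order Markov (bigram) model that simply counts which item tends to follow which; it is far simpler than a transformer like GPT, and the class name and the musical-note data are illustrative assumptions. The point is only that nothing in it is specific to language: any hashable item works.

```python
from collections import Counter, defaultdict
from typing import Hashable, Iterable, List, Optional, Sequence


class NextThingModel:
    """Toy bigram model: predicts the next item from the current one.

    Items can be any hashable "thing": words, notes, robot actions, ...
    """

    def __init__(self) -> None:
        # counts[a][b] = how often item b followed item a in the training data
        self.counts = defaultdict(Counter)

    def train(self, sequences: Iterable[Sequence[Hashable]]) -> None:
        for seq in sequences:
            for current, nxt in zip(seq, seq[1:]):
                self.counts[current][nxt] += 1

    def predict_next(self, item: Hashable) -> Optional[Hashable]:
        # .get() does not trigger the defaultdict factory for unseen items
        followers = self.counts.get(item)
        if not followers:
            return None  # never seen this item followed by anything
        # Most frequent follower; ties go to the one seen first
        return followers.most_common(1)[0][0]

    def generate(self, start: Hashable, max_len: int = 10) -> List[Hashable]:
        out = [start]
        while len(out) < max_len:
            nxt = self.predict_next(out[-1])
            if nxt is None:
                break
            out.append(nxt)
        return out


# Usage: "things" here are musical notes rather than words.
melodies = [["C", "D", "E", "C"], ["C", "D", "E", "F"], ["E", "F", "G"]]
model = NextThingModel()
model.train(melodies)
print(model.generate("C", max_len=5))
```

Swapping the notes for pixels, actions, or sensor readings changes nothing in the code, which is the intuition behind the paragraph above; real models replace the counting table with a learned network that conditions on much longer context.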

Large Thing Model instead of Large Language Model

Now on to the second point. Imagine you want to build a robot that brings you a drink from the fridge. This simple task by itself involves many different activities:

- go to the room’s door

- if it is closed, open it

- move to the top of the stairs

- go down the stairs

- find the path to the fridge

- go to the fridge

- open the fridge’s door

- identify the items available

- choose a drink based on some prioritization

- pick up that drink safely

- return to the room by reversing the first five steps
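The idea of returning by reversing the first five steps can be sketched in a few lines of Python. This is a minimal illustration, assuming every movement step has a known opposite move; the step strings and the INVERSE table are hypothetical, and a real robot would need actual navigation and manipulation code behind each name.

```python
from typing import Dict, List

# Outbound navigation: the first five steps of the list above.
OUTBOUND: List[str] = [
    "go to the room's door",
    "open the door if it is closed",
    "move to the top of the stairs",
    "go down the stairs",
    "follow the path to the fridge",
]

# Steps performed at the fridge.
AT_FRIDGE: List[str] = [
    "go to the fridge",
    "open the fridge's door",
    "identify the items available",
    "choose a drink by priority",
    "pick up the drink safely",
]

# Hypothetical table mapping each movement to the move that undoes it.
# Steps without an entry (opening the door) need no action on the way back.
INVERSE: Dict[str, str] = {
    "go to the room's door": "go from the door back into the room",
    "move to the top of the stairs": "move from the top of the stairs to the room's door",
    "go down the stairs": "go up the stairs",
    "follow the path to the fridge": "follow the path back to the stairs",
}


def return_leg(outbound: List[str]) -> List[str]:
    """Walk the outbound route backwards, replacing each move with its inverse."""
    return [INVERSE[step] for step in reversed(outbound) if step in INVERSE]


def full_routine() -> List[str]:
    return OUTBOUND + AT_FRIDGE + return_leg(OUTBOUND)


for i, step in enumerate(full_routine(), start=1):
    print(f"{i:2d}. {step}")
```

Even in this toy version, the hand-written INVERSE table is where the routine's knowledge lives, and it has to be extended by hand for every new activity.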

Now imagine that you want to extend your robot with all the routine activities that do not require your direct involvement. While each step of this process is easy enough on its own, the hardest part is connecting all the dots and creating a routine for the end-to-end process.

On the other hand, technologies like ChatGPT are great at producing the list of tasks involved in performing an activity. For this specific example, I asked it which steps are required, and here is the output:

Sure, here’s a step-by-step flow for you to get a drink from the fridge in the kitchen on the first floor and come back to your office on the second floor:

- Stand up from your chair and stretch your legs.

- Walk towards the door of your office.

- Open the door and walk out of the office.

- Locate the staircase or elevator that leads to the first floor.

- If you have to take the stairs, carefully walk down the stairs one step at a time, holding onto the railing if necessary. If you have to take the elevator, press the button to call the elevator and wait for it to arrive.

- Once you’ve reached the first floor, locate the kitchen where the fridge is located.

- Walk towards the fridge and open it.

- Look for the drink that you want and take it out of the fridge.

- Close the fridge door.

- If you need a glass or a straw, get it from the kitchen.

- Walk back towards the staircase or elevator.

- If you took the stairs down, carefully walk back up the stairs one step at a time, holding onto the railing if necessary. If you took the elevator down, press the button to call the elevator and wait for it to arrive.

- Once you’ve reached the second floor, walk back towards your office.

- Open the door to your office.

- Walk back to your desk and sit down.

- Enjoy your drink!

I think these steps are more thorough and better written than my own list!

Imagine a machine that, given the right hardware and a way to connect it to an LTM, or Large Thing Model, could cover a large set of activities that can be automated and expanded without heavy lifting from the machine’s creators!
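One crude way to picture connecting a model to a machine: take a plan written by a language model, split it into steps, and map each step onto a primitive the robot already knows. The Python sketch below does this with simple keyword matching; the SKILLS table and the primitive names are hypothetical, and real grounding of language into actions is far harder. Steps that map to nothing are exactly the dots that still need connecting.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical keyword -> robot primitive table, checked in insertion order.
SKILLS: Dict[str, str] = {
    "stairs": "climb_stairs",
    "elevator": "ride_elevator",
    "open": "open_object",
    "close": "close_object",
    "walk": "navigate",
    "take": "grasp",
}


def parse_plan(text: str) -> List[str]:
    """Keep only bullet items ("- ...") from a model's free-text answer."""
    return [line.strip()[2:].strip() for line in text.splitlines()
            if line.strip().startswith("- ")]


def ground_step(step: str) -> Optional[str]:
    """Return the first primitive whose keyword appears in the step, if any."""
    lowered = step.lower()
    for keyword, primitive in SKILLS.items():
        if keyword in lowered:
            return primitive
    return None


def ground_plan(text: str) -> List[Tuple[str, Optional[str]]]:
    return [(step, ground_step(step)) for step in parse_plan(text)]


plan = """Sure, here's a step-by-step flow:
- Walk towards the door of your office.
- Open the door and walk out of the office.
- Enjoy your drink!
"""

for step, primitive in ground_plan(plan):
    print(f"{primitive or 'UNMAPPED':>12}  <- {step}")
```

Note that the second step really needs two primitives (open, then navigate), and first-match lookup silently drops one of them: a small example of why connecting the dots is the hard part.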

In my opinion, the next big step is not further advancing natural language understanding, but figuring out how the same technology can be used for other challenges. How fast will we move toward a general LTM protocol?

Lastly

I wrote this post approximately a month ago (a first post is scary!). Since then, the AI and LLM landscape has gone through a major paradigm shift, with new startups and use cases appearing every day. Interestingly, and related to my second point, there has been a significant push to include multiple modalities in a single model. At the top of the list of such efforts are Google’s new PaLM-E model and Microsoft’s Kosmos-1.

I am extremely excited about what the future holds and am looking forward to all the advancements coming soon!

References:

How GPT3 Works – Visualizations and Animations: A beautiful post by Jay Alammar describing how GPT-3 works with minimal technical complexity.

Stanford Webinar – GPT-3 & Beyond: A good look at what comes next for LLMs, the current players, and trends.